L.A. Noire Face Reading

Throughout the history of video games, the industry has had an inferiority complex when compared to Hollywood. Each year some developers create games that are more serious and more story-oriented, as if to say, "See? We aren't just kids' toys! We can be Art too!" Last year, Heavy Rain took up that cinematic mantle. This year, it will be L.A. Noire.

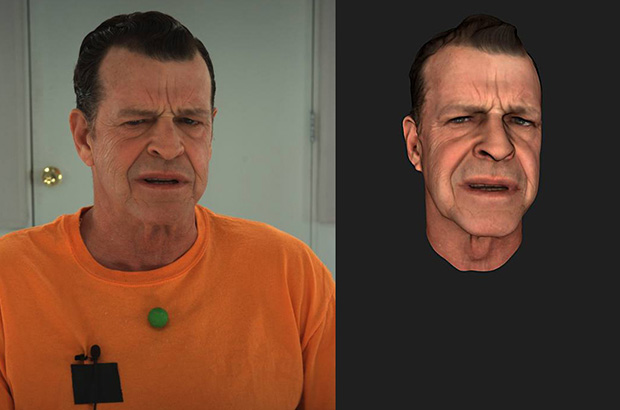

Made by Team Bondi and Rockstar, the AAA developer behind the violent and cinematic Grand Theft Auto series, L.A. Noire is set in post-WWII Los Angeles and gives the player the role of Cole Phelps (Mad Men's Aaron Staton), a war hero turned police detective. The game features a detailed L.A. that stretches for 8 square miles, and over 400 characters, all of them motion-captured by actors. But Bondi took things further with a technology called MotionScan, which captures the actors' heads, producing realistic (and recognizable) faces with believable expressions, the better for the player-turned-cop to determine who's lying about a crime. I talked to Brendan McNamara, the head of Team Bondi and L.A. Noire's writer and director, about the technology.

Kevin Ohannessian: What was the reason Rockstar developed the MotionScan tech?

Brendan McNamara: MotionScan technology was created by Depth Analysis, a tech studio and sister company to Team Bondi. We knew we wanted to make a detective thriller, and existing technologies have come a long way in creating very realistic objects and backgrounds, but we felt like the current facial motion capture technologies did not do so well in realistically capturing a performance. MotionScan was built to address that challenge and provide an experience that plays an essential role in L.A. Noire, allowing players to carefully analyze characters and determine whether they are lying or telling the truth. With performances that are captured with MotionScan, what you see is what you get, so there is literally no difference between what our actors have captured in the studio and what our players are interacting with in the game.

How has using MotionScan changed the way you write and direct a game?

MotionScan has made post-production much more streamlined and efficient. The technology directly transfers the actor's performance into the game engine, so very little post-processing work is needed. That means we can free up artists and designers to focus on other aspects of the game, as well as pay more attention to fine-tuning things from a storytelling standpoint. We can place a lot more emphasis on working with actors to provide incredibly nuanced and believable performances, and from a director's point of view, we can frame scenes with essentially as much precision as filmmaking. From the actor's perspective, they can create a character and see the direct results, which is something that can sometimes be lost in the traditional voice-over work found in most games.

The rig uses 32 cameras: what is their exact distribution, and why are so many needed?

Our MotionScan rig utilizes 32 HD cameras that are placed around the floor, ceiling and eye-level of an actor, positioned at various distances around an actor's head to create a 360-degree image. In a way, it's similar to the "panorama stitch" feature on digital cameras where individual pictures are combined to create one massive image. For MotionScan, the rig composes a 3-D mesh around the face so every angle and movement can be captured. With so many different angles and details found on the human head, we needed 32 cameras to produce a true-to-life capture that doesn't miss a single detail in every performance.

After the camera rig puts all of the footage into the software, what happens then? What kind of compositing is done? And how much work is needed after that to get it into the game?

For a game there is no compositing as such. Once capture is complete, a frame range is selected for processing. Then a resolution is defined. For games this is a lot lower than the native 2K x 2K resolution that the system captures. Once the frame range and resolution are selected, the data is sent to a blade cluster and processed into a 3-D file. This file can either be in the MotionScan native format for game engines or in a format for an art package such as Maya or Max. The data file for the head is then parented to the neck joint of the character in the game or rendering package.
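The final step McNamara mentions, parenting the head data to the character's neck joint, is ordinary scene-graph parenting: the head's world transform is the neck joint's world transform multiplied by the head's local offset, so the captured face follows whatever the body animation does. The sketch below illustrates that idea with plain 4x4 matrices. It is not Team Bondi's or Depth Analysis's code; the matrix convention (row-major, applied to column vectors), the sample pose, and every function name are hypothetical, chosen only for illustration.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]  # 4x4, row-major, applied to column vectors (assumption)


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Return the 4x4 product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def translation(x: float, y: float, z: float) -> Matrix:
    """Pure translation matrix."""
    return [[1.0, 0.0, 0.0, x],
            [0.0, 1.0, 0.0, y],
            [0.0, 0.0, 1.0, z],
            [0.0, 0.0, 0.0, 1.0]]


def rotation_y(angle_rad: float) -> Matrix:
    """Rotation about the vertical (Y) axis."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, 0.0, s, 0.0],
            [0.0, 1.0, 0.0, 0.0],
            [-s, 0.0, c, 0.0],
            [0.0, 0.0, 0.0, 1.0]]


def head_world_matrix(neck_world: Matrix, head_local: Matrix) -> Matrix:
    """Parenting: child world transform = parent world transform * child local transform."""
    return matmul(neck_world, head_local)


def transform_point(m: Matrix, p: Tuple[float, float, float]) -> Tuple[float, float, float]:
    """Apply a 4x4 transform to a 3-D point (homogeneous w = 1)."""
    v = (p[0], p[1], p[2], 1.0)
    x, y, z = (sum(m[i][k] * v[k] for k in range(4)) for i in range(3))
    return (x, y, z)


if __name__ == "__main__":
    # Hypothetical pose: neck joint 1.5 units up, body turned 90 degrees.
    neck = matmul(translation(0.0, 1.5, 0.0), rotation_y(math.pi / 2))
    # Hypothetical offset of the captured head relative to the neck joint.
    head = head_world_matrix(neck, translation(0.0, 0.1, 0.0))
    # A head-space vertex 0.1 units in front of the head's origin.
    print(transform_point(head, (0.0, 0.0, 0.1)))
```

With this pose, the head-space point lands at roughly (0.1, 1.6, 0.0) in world space: it is lifted by the neck height plus the head offset and swung around by the body's 90-degree turn. Engines and art packages differ in matrix layout and multiplication order (Maya, for instance, uses row vectors), so the ordering here is an assumption rather than a description of any particular tool.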

The data is resolution-independent: it can be played back at any resolution from 2K x 2K downwards. This is up to the design of the game and how much RAM the game needs to allocate to this task. In L.A. Noire it's 30 MB for three people talking on screen. The playback of the data can also be varied based on the distance from the camera, so fewer triangles and texture samples can be used when the face is smaller on screen.
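The distance-based playback described here can be sketched as a level-of-detail rule: estimate how tall the face appears on screen, then pick the smallest playback resolution that covers it, never exceeding the native capture size. The sketch below assumes a simple pinhole camera model, reads "2K x 2K" as 2048 x 2048, and uses power-of-two resolution steps with a hypothetical 64-pixel floor; none of these details come from the interview, and the function names and default values are likewise hypothetical. It also shows the budget arithmetic implied by the quoted figure of 30 MB for three on-screen faces, assuming an even split.

```python
NATIVE_RESOLUTION = 2048  # assumption: "2K x 2K" read as 2048 x 2048 pixels
MIN_RESOLUTION = 64       # hypothetical lowest level of detail


def face_screen_height_px(distance_m: float,
                          face_height_m: float = 0.25,
                          focal_length_px: float = 1000.0) -> float:
    """Pinhole-camera estimate of a face's on-screen height in pixels.

    face_height_m and focal_length_px are hypothetical defaults.
    """
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return focal_length_px * face_height_m / distance_m


def playback_resolution(face_height_px: float) -> int:
    """Smallest power-of-two resolution covering the face, capped at native."""
    res = MIN_RESOLUTION
    while res < face_height_px and res < NATIVE_RESOLUTION:
        res *= 2
    return res


def per_face_budget_mb(total_mb: float, faces_on_screen: int) -> float:
    """Split a facial-playback memory budget evenly across visible faces."""
    if faces_on_screen < 1:
        raise ValueError("need at least one face on screen")
    return total_mb / faces_on_screen


if __name__ == "__main__":
    for d in (0.5, 1.0, 10.0):
        h = face_screen_height_px(d)
        print(f"{d} m -> {h:.0f} px face -> {playback_resolution(h)} px playback")
    print(per_face_budget_mb(30.0, 3), "MB per face")
```

With the default values, a face 1 m from the camera covers about 250 pixels and plays back at 256 x 256, while at 10 m it drops to the 64-pixel floor; the 30 MB budget works out to 10 MB per face. A real engine would also scale triangle counts with distance, as McNamara notes, which this sketch leaves out.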

Do you believe such tech will let games compete more with film?

I believe that MotionScan/L.A. Noire will go a long way in bringing new audiences into games because of the realism it offers. Even as the film industry continues to advance new technologies, they are delivering content to a passive audience that cannot change what they see on the screen. With L.A. Noire, we're combining interactivity into an experience that is also very believable and immersive. We hope this approach will go a long way in making L.A. Noire appeal to the widest audience possible, whether they be experienced gamers, casual observers, noir film buffs, or anything in-between.

And what are the plans for MotionScan beyond L.A. Noire? Will it be used in other Rockstar or Take-Two games? Licensed out to other companies?

We have a number of other customers who are either currently using MotionScan in new games or are evaluating the technology for upcoming projects. There has obviously been a lot of interest from the film business as well. We are certainly excited about MotionScan and its future potential. For now, however, the focus is on completing L.A. Noire and getting it into players' hands on May 17.

Source: https://www.fastcompany.com/1724050/game-face-la-noire-brings-actors-full-performance-gaming